Gunicorn: A Practical Resource for Backend Developers

Published on February 15, 2026

By ToolsGuruHub

This page is a solid, engineer-to-engineer reference for using Gunicorn to deploy Python web applications in production. It covers what it is, how it works under the hood, where it fits (and where it doesn't), how it pairs with Uvicorn, alternatives you might consider, and real production tips.

What is Gunicorn?

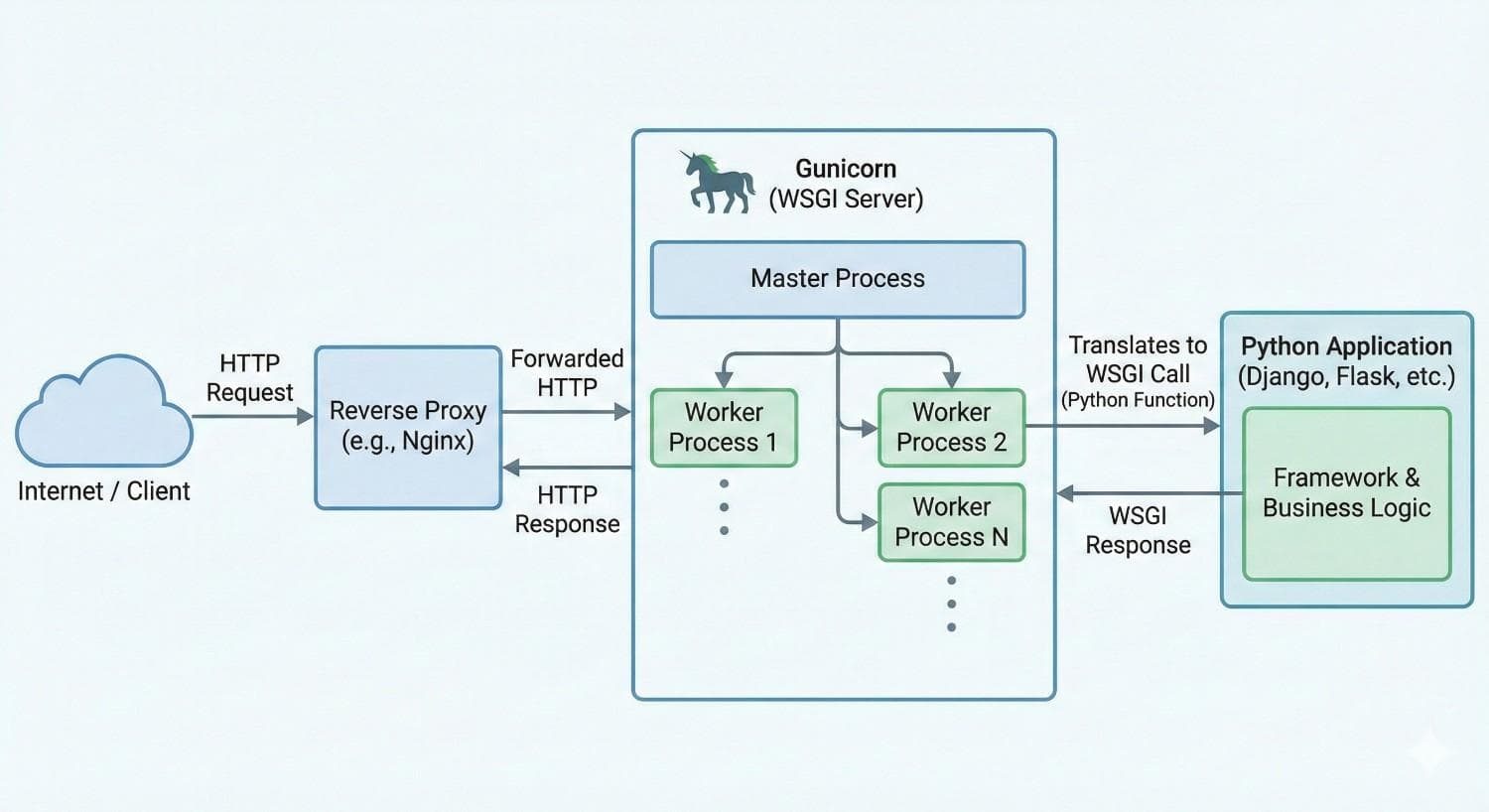

Gunicorn (Green Unicorn) is a pure-Python HTTP server designed to run Python web applications in production. It implements the WSGI interface and manages multiple worker processes to handle incoming HTTP requests efficiently. It's widely used with frameworks like Flask, Django, and Pyramid, and it can also host ASGI apps when paired with an appropriate worker class such as uvicorn.workers.UvicornWorker.

At a high level:

- The master process binds a listening socket; worker processes accept connections from that socket directly.

- Workers handle requests and return responses.

- The master process (arbiter) manages workers' lifecycle.

In simple terms: Gunicorn is the piece of software that sits between the internet (or your reverse proxy like Nginx) and your actual Python code. It translates HTTP requests into Python function calls that your framework understands. It is a pre-fork worker model server ported from Ruby's Unicorn project—simple to configure, stable, and reasonably fast for most use cases.

What is WSGI?

WSGI (Web Server Gateway Interface) is a simple, synchronous Python spec that defines how web servers and Python apps communicate. It's essentially a callable interface: a server calls your app with a dictionary of environment data and a start_response() callback, and your app returns an iterable response.
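To make that callable interface concrete, here is a minimal framework-free WSGI app, plus a few lines that play the server's part by hand. The names app and start_response are illustrative; wsgiref.util.setup_testing_defaults is a standard-library helper that fills an environ dict with valid default values.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A minimal WSGI application: a callable taking environ and start_response."""
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from {path}\n".encode("utf-8")
    # Report the status line and headers through the server-supplied callback...
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    # ...then return an iterable of bytes as the response body.
    return [body]


# Play the server's role by hand: build an environ and record what the app reports.
environ = {"PATH_INFO": "/health"}
setup_testing_defaults(environ)  # adds REQUEST_METHOD, SERVER_NAME, wsgi.input, etc.
captured = {}


def start_response(status, headers, exc_info=None):
    captured["status"], captured["headers"] = status, headers
    return lambda data: None  # the legacy write() callable; this app never uses it


body = b"".join(app(environ, start_response))
print(captured["status"], body)
```

A framework like Flask or Django ultimately exposes an object with this same signature; Gunicorn's job is to build the environ from real network traffic and turn the returned iterable back into HTTP bytes.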

Python web frameworks (Django, Flask) cannot speak "HTTP" natively in a way that is robust enough for the open internet. WSGI is the specification that defines a standard interface between web servers and Python web applications:

- Nginx/Apache: Speaks raw HTTP (TCP packets, headers, SSL).

- WSGI Server (Gunicorn): Acts as the translator. It takes the raw HTTP bytes, turns them into a Python dictionary called environ, and calls a callable object (your app).

- Your App: Takes the environ, runs your business logic, and returns a response.

Without a WSGI server, you're stuck with the framework's development server (e.g. Django's runserver), which is built for convenience and has not been security-audited or performance-tested for production use.
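The translator role described above can be sketched as a toy "server" that turns raw request bytes into an environ, calls the app, and serializes the response. It assumes a complete HTTP/1.x request arrives as one bytes object and skips everything a real server like Gunicorn handles around sockets, keep-alive, chunked bodies, and malformed input; parse_request, serve_once, and hello_app are hypothetical names.

```python
import io
import sys


def hello_app(environ, start_response):
    """Tiny WSGI app used to exercise the toy server."""
    text = f"{environ['REQUEST_METHOD']} {environ['PATH_INFO']}?{environ['QUERY_STRING']}"
    body = text.encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain"), ("Content-Length", str(len(body)))])
    return [body]


def parse_request(raw: bytes) -> dict:
    """Turn raw HTTP/1.x request bytes into a WSGI environ dict (toy parser)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    request_line, *header_lines = head.decode("latin-1").split("\r\n")
    method, target, protocol = request_line.split(" ", 2)
    path, _, query = target.partition("?")
    environ = {
        "REQUEST_METHOD": method,
        "SCRIPT_NAME": "",
        "PATH_INFO": path,
        "QUERY_STRING": query,
        "SERVER_NAME": "localhost",
        "SERVER_PORT": "8000",
        "SERVER_PROTOCOL": protocol,
        "wsgi.version": (1, 0),
        "wsgi.url_scheme": "http",
        "wsgi.input": io.BytesIO(body),
        "wsgi.errors": sys.stderr,
        "wsgi.multithread": False,
        "wsgi.multiprocess": True,
        "wsgi.run_once": False,
    }
    for line in header_lines:
        if not line:
            continue
        name, _, value = line.partition(":")
        key = name.strip().upper().replace("-", "_")
        # PEP 3333: Content-Type/Length drop the HTTP_ prefix; other headers keep it.
        if key in ("CONTENT_TYPE", "CONTENT_LENGTH"):
            environ[key] = value.strip()
        else:
            environ["HTTP_" + key] = value.strip()
    return environ


def serve_once(app, raw: bytes) -> bytes:
    """Parse the request, call the WSGI app, and serialize its response to bytes."""
    meta = {}
    written = []  # data passed to the legacy write() callable, if the app uses it

    def start_response(status, headers, exc_info=None):
        meta["status"], meta["headers"] = status, headers
        return written.append

    result = app(parse_request(raw), start_response)
    try:
        iterated = b"".join(result)
    finally:
        if hasattr(result, "close"):  # PEP 3333: the server must call close() if present
            result.close()
    head = f"HTTP/1.1 {meta['status']}\r\n"
    head += "".join(f"{name}: {value}\r\n" for name, value in meta["headers"])
    return head.encode("latin-1") + b"\r\n" + b"".join(written) + iterated


response = serve_once(hello_app, b"GET /items?page=2 HTTP/1.1\r\nHost: example.com\r\n\r\n")
print(response.decode("latin-1"))
```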

How Gunicorn Works Internally (Process Model)

Gunicorn uses a pre-fork model borrowed from servers like Unicorn (in Ruby):

- Master (Arbiter) process starts: Binds to a socket (e.g. 0.0.0.0:8000) and manages worker processes.

- Worker processes are forked before handling traffic.

- Workers accept connections, call your app, and send responses back.

- Master monitors workers:

  - Restarts workers that die unexpectedly.

  - Handles signals for reloads and scaling: HUP reloads, TTIN adds a worker, TTOU removes one.

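The master does not handle HTTP requests; it only manages workers. If a worker crashes, the master spawns a new one. You can reload with zero downtime by sending HUP to the master (kill -HUP master_pid): it starts new workers and gracefully shuts down the old ones, which finish their current requests first. New workers pick up new code only if the app is not preloaded; with --preload, restart Gunicorn fully to deploy code changes.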
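Here is a toy, standard-library-only sketch of that pre-fork pattern. It is Unix-only (it uses os.fork), it is not Gunicorn's actual arbiter code, and the names ToyArbiter, handle, and fetch are hypothetical. It leaves out signal handling, timeouts, and graceful shutdown, but it shows the core mechanics: the master binds the socket once and forks; each worker calls accept() on the inherited socket itself, so the master never touches requests; and the master reaps exited workers and forks replacements. The max_requests parameter mimics recycling a worker after a fixed number of requests.

```python
import os
import signal
import socket
import time


def handle(conn: socket.socket) -> None:
    """Read one request's headers and reply with a tiny HTTP/1.0 response."""
    with conn:
        data = b""
        while b"\r\n\r\n" not in data:
            chunk = conn.recv(4096)
            if not chunk:
                break
            data += chunk
        body = f"served by worker {os.getpid()}\n".encode()
        header = b"HTTP/1.0 200 OK\r\nContent-Length: %d\r\n\r\n" % len(body)
        conn.sendall(header + body)


class ToyArbiter:
    """Pre-fork sketch: the master binds, forks, and supervises; workers accept."""

    def __init__(self, num_workers: int = 2, max_requests: int = 1) -> None:
        self.num_workers = num_workers
        self.max_requests = max_requests  # each worker exits after this many requests
        # The master binds the listening socket once; forked children inherit it.
        self.listener = socket.create_server(("127.0.0.1", 0))  # port 0 = any free port
        self.address = self.listener.getsockname()
        self.workers = set()  # pids of live worker processes

    def spawn_worker(self) -> None:
        pid = os.fork()
        if pid == 0:  # child process: become a worker
            signal.signal(signal.SIGTERM, signal.SIG_DFL)  # let SIGTERM end the worker
            exit_code = 0
            try:
                for _ in range(self.max_requests):
                    conn, _addr = self.listener.accept()  # the worker accepts, not the master
                    handle(conn)
            except BaseException:
                exit_code = 1
            os._exit(exit_code)  # never fall back into the master's code path
        self.workers.add(pid)  # parent: remember the child's pid

    def start(self) -> None:
        for _ in range(self.num_workers):
            self.spawn_worker()

    def reap_and_respawn(self) -> int:
        """Wait until some worker exits, fork a replacement, and return the dead pid."""
        while True:
            for pid in list(self.workers):
                done, _status = os.waitpid(pid, os.WNOHANG)
                if done == pid:
                    self.workers.discard(pid)
                    self.spawn_worker()
                    return pid
            time.sleep(0.01)

    def stop(self) -> None:
        for pid in self.workers:
            os.kill(pid, signal.SIGTERM)
        for pid in self.workers:
            os.waitpid(pid, 0)
        self.workers.clear()
        self.listener.close()


def fetch(address) -> str:
    """Minimal HTTP client: send one request, read until the server closes."""
    with socket.create_connection(address) as sock:
        sock.sendall(b"GET / HTTP/1.0\r\n\r\n")
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return b"".join(chunks).decode()


if __name__ == "__main__":
    arbiter = ToyArbiter(num_workers=2, max_requests=1)
    arbiter.start()
    try:
        for _ in range(4):
            print(fetch(arbiter.address).splitlines()[-1])
            arbiter.reap_and_respawn()  # the worker that just served exits; replace it
    finally:
        arbiter.stop()
```

Running it prints a different worker pid for each request, because every worker retires after one request and the master forks a fresh one in its place.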

Worker Types Explained

Gunicorn supports several worker classes—choose based on your workload:

| Worker Class | Concurrency Model | Best For |
|---|---|---|
| sync (default) | One request at a time per worker | Simple apps, CPU-bound work, or low-concurrency loads |
| gthread | Multiple threads per worker process | I/O-bound apps with thread-safe code |
| gevent (async) | Cooperative greenlets | I/O-bound workloads needing high concurrency |
| uvicorn.workers.UvicornWorker | Uvicorn's async event loop inside each Gunicorn worker | ASGI apps (e.g. FastAPI) that need async plus process management |

- Sync workers are simple and reliable, but a slow request occupies its worker until it finishes.

- Threaded workers (gthread, sized with --threads) can handle multiple requests in one process but need thread-safe code.

- Async workers (gevent/eventlet) can support many simultaneous connections but rely on monkey-patching the standard library, which adds complexity.

When Should You Use Gunicorn?

Use Gunicorn when you:

- Need a production-ready WSGI server for Flask, Django, or similar.

- Want process management (auto worker restart, reloads, timeouts).

- Are deploying behind a reverse proxy like nginx.

- Need to maximize CPU utilization with multiple worker processes.

It's simple to integrate with systemd, supervisord, Kubernetes, and Docker. It is designed to run behind a reverse proxy such as Nginx or an AWS ALB, not to face the raw internet directly.

When Should You NOT Use Gunicorn?

Skip Gunicorn if:

- You only need a local development server and have no use for process management.

- You're serving a simple async (ASGI) app and prefer to run a single server process per container, leaving scaling and restarts to your container orchestrator.

- You need HTTP/3 support: Gunicorn doesn't provide it, so you'll need Hypercorn or another server that does.

- You are on Windows: Gunicorn is Unix-only (it relies on fork()), so use Waitress there.

- You want to serve static files from it: each file download ties up a Gunicorn worker, so let Nginx or a CDN serve them instead.

For pure ASGI workloads, a standalone ASGI server such as Uvicorn or Hypercorn, run under a process manager, can be preferable.

How Gunicorn Works with Uvicorn (Gunicorn + Uvicorn)

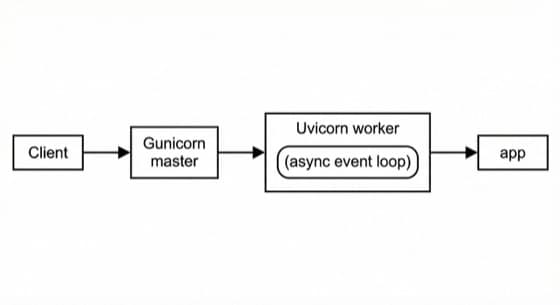

Gunicorn doesn't natively speak ASGI, but it can host an ASGI runtime via a worker class:

gunicorn myapp:app -k uvicorn.workers.UvicornWorker --workers 4

- Gunicorn manages worker processes.

- Each worker runs a full Uvicorn instance handling async requests.

- You get automatic restarts, graceful timeouts, and process supervision.

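This combination is a widely used production setup for ASGI apps: Gunicorn as the process manager, Uvicorn as the worker running the async event loop. Note that recent Uvicorn releases deprecate the uvicorn.workers module in favor of the separate uvicorn-worker package, whose worker class is uvicorn_worker.UvicornWorker.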
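For comparison with the WSGI callable, an ASGI app is an async callable that receives a scope dict plus receive and send coroutines, and talks to the server through message dicts. A minimal framework-free sketch follows; the drive() helper is a hypothetical stand-in for what a Uvicorn worker does, included only so the app can be exercised without a server. Saved as myapp.py, the same app could be served with the gunicorn command shown in this section.

```python
import asyncio


async def app(scope, receive, send):
    """Minimal ASGI HTTP app: the server passes scope, receive, and send."""
    if scope["type"] != "http":
        return  # this sketch ignores lifespan and websocket scopes
    await receive()  # read the (empty) request body event
    body = f"Hello from {scope['path']}\n".encode()
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"text/plain"), (b"content-length", str(len(body)).encode())],
    })
    await send({"type": "http.response.body", "body": body})


async def drive(asgi_app, path="/"):
    """Play the server's role: supply a scope and collect the messages the app sends."""
    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await asgi_app(scope, receive, send)
    return sent


messages = asyncio.run(drive(app, "/health"))
print(messages[0]["status"], messages[1]["body"])
```

Because the app awaits instead of blocking, one Uvicorn worker can interleave many such requests on its event loop, while Gunicorn keeps several of those workers alive.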

Alternatives to Gunicorn

| Alternative | Notes |
|---|---|
| Uvicorn alone | Lightweight ASGI server; its --workers option offers only basic process management |
| Daphne | ASGI server developed for Django Channels |
| Hypercorn | ASGI and WSGI server with HTTP/2 and HTTP/3 support |
| uWSGI | Feature-rich, highly configurable server; more complex to operate, and the project is in maintenance mode |
| Waitress | Pure-Python WSGI server that runs on Windows; simpler than Gunicorn |

Practical Production Tips

- Use a reverse proxy (e.g. Nginx) in front of Gunicorn for TLS, buffering, and load balancing. Never expose Gunicorn to port 80/443 directly.

- Worker count: Start with workers = (2 * CPUs) + 1 and adjust based on profiling. Don't blindly set 50 workers; more workers mean more RAM and more context switching.

- Timeouts: Set timeout and graceful_timeout to avoid hung workers; both default to 30 seconds. Don't raise timeout to accommodate long tasks (e.g. "Generate Report"); move those to a background queue (Celery/RQ).

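- max_requests: Recycle each worker after it has served a set number of requests to contain memory leaks; pair it with max_requests_jitter so workers don't all restart at the same moment.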

- Preload (--preload): Loads app code in the master before forking, which saves RAM via copy-on-write. Avoid it if your app opens DB connections at import time: connections inherited across a fork end up shared by every worker, which is unsafe.

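- Logging: By default Gunicorn writes its error log to stderr and writes no access log at all. In production, enable the access log (e.g. --access-logfile - for stdout) and capture both streams.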

- In Docker/Kubernetes, treat Gunicorn as the entry point and scale horizontally via replicas.

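- For async apps, prefer Uvicorn workers with uvloop for performance; installing uvicorn[standard] pulls in uvloop, which Uvicorn uses automatically when it is available.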
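The tips above map onto settings in a Gunicorn configuration file, which is itself a Python module. A starting-point sketch follows; the specific values are assumptions to tune against your own profiling, not recommendations from the Gunicorn project (apart from the (2 * CPUs) + 1 rule of thumb, which comes from its docs).

```python
# gunicorn.conf.py: a starting-point sketch; tune every value against your own profiling.
import multiprocessing

# Listen only on localhost; the reverse proxy (e.g. Nginx) is the public entry point.
bind = "127.0.0.1:8000"

# Rule of thumb from the Gunicorn docs: (2 x CPU cores) + 1, then adjust.
workers = multiprocessing.cpu_count() * 2 + 1
worker_class = "sync"  # or "gthread", "gevent", "uvicorn.workers.UvicornWorker"

# Workers silent for longer than `timeout` seconds are killed and replaced;
# on a restart signal, workers get `graceful_timeout` seconds to finish requests.
timeout = 30
graceful_timeout = 30

# Recycle each worker after about 1000 requests; jitter staggers the restarts.
max_requests = 1000
max_requests_jitter = 100

# Loading the app in the master before forking saves memory via copy-on-write,
# but leave it off if the app opens DB connections at import time.
preload_app = False

# "-" sends the access log to stdout and the error log to stderr for log collectors.
accesslog = "-"
errorlog = "-"
loglevel = "info"


def post_fork(server, worker):
    """Server hook that Gunicorn calls in each worker right after it is forked."""
    server.log.info("worker spawned (pid: %s)", worker.pid)
```

Gunicorn loads ./gunicorn.conf.py from the working directory by default, or you can point at it explicitly with gunicorn -c gunicorn.conf.py myapp:app.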

Summary

Gunicorn is battle-tested, minimal, and practical—ideal as a production WSGI server or a process manager for ASGI workloads. It won't solve architectural issues for you, but it gives you a solid foundation for reliable, scalable deployments when paired with good ops practices and monitoring.